Create Expectations that span multiple Batches using Evaluation Parameters

This guide will help you create Expectations that span multiple Batches of data using Evaluation Parameters (see also Evaluation Parameter Stores). This pattern is useful for things like verifying that row counts between tables stay consistent.

Prerequisites

- A configured Data Context.

- A configured Data Source (or several Data Sources) with a minimum of two Data Assets and an understanding of the basics of Batch Requests.

- Expectation Suites for the Data Assets.

- A working Evaluation Parameter Store. The default in-memory Store from great_expectations init can work for this.

- A working Checkpoint.

Import great_expectations and instantiate your Data Context

Run the following Python code in a notebook:

import great_expectations as gx

context = gx.get_context()

Instantiate two Validators, one for each Data Asset

We'll call one of these Validators the upstream Validator and the other the downstream Validator. Evaluation Parameters will allow us to use Validation Results from the upstream Validator as parameters passed into Expectations on the downstream Validator.

# data_directory must already point to the folder that holds the sample CSV files.
datasource = context.sources.add_pandas_filesystem(
    name="demo_pandas", base_directory=data_directory
)

asset = datasource.add_csv_asset(
    "yellow_tripdata",
    batching_regex=r"yellow_tripdata_sample_(?P<year>\d{4})-(?P<month>\d{2})\.csv",
    order_by=["-year", "month"],
)

upstream_batch_request = asset.build_batch_request({"year": "2020", "month": "04"})
downstream_batch_request = asset.build_batch_request({"year": "2020", "month": "05"})

upstream_validator = context.get_validator(
    batch_request=upstream_batch_request,
    create_expectation_suite_with_name="upstream_expectation_suite",
)

downstream_validator = context.get_validator(
    batch_request=downstream_batch_request,
    create_expectation_suite_with_name="downstream_expectation_suite",
)
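To see which Batch identifiers a file name yields, the named groups in the batching regex can be tried out with Python's standard `re` module. This is only a sketch: `batch_identifiers` is a hypothetical helper, the file names are assumed from the pattern, and Great Expectations' own matching logic may differ in detail. The dot before `csv` is escaped here so that it matches a literal dot.

```python
import re

# Mirrors the batching_regex used for the CSV asset in this guide.
BATCHING_REGEX = re.compile(
    r"yellow_tripdata_sample_(?P<year>\d{4})-(?P<month>\d{2})\.csv"
)


def batch_identifiers(filename):
    """Hypothetical helper: return the named groups for a matching file name, or None."""
    match = BATCHING_REGEX.fullmatch(filename)
    return match.groupdict() if match else None


# File names assumed for illustration.
upstream = batch_identifiers("yellow_tripdata_sample_2020-04.csv")
downstream = batch_identifiers("yellow_tripdata_sample_2020-05.csv")
unmatched = batch_identifiers("green_tripdata_sample_2020-04.csv")
```

The dictionaries returned for the two matching files have the same keys and values as the options passed to `build_batch_request` for the upstream and downstream Batches.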

Create the Expectation Suite for the upstream Validator

upstream_validator.expect_table_row_count_to_be_between(min_value=5000, max_value=20000)

upstream_validator.save_expectation_suite(discard_failed_expectations=False)

This suite will be used on the upstream batch. The observed value for number of rows will be used as a parameter in the Expectation Suite for the downstream batch.

Disable interactive evaluation for the downstream Validator

downstream_validator.interactive_evaluation = False

Disabling interactive evaluation allows you to declare an Expectation even when it cannot be evaluated immediately.

Define an Expectation using an Evaluation Parameter on the downstream Validator

eval_param_urn = "urn:great_expectations:validations:upstream_expectation_suite:expect_table_row_count_to_be_between.result.observed_value"

downstream_validator_validation_result = downstream_validator.expect_table_row_count_to_equal(
    value={
        "$PARAMETER": eval_param_urn,  # this is the actual parameter we're going to use in the validation
    }
)

The core of this is a $PARAMETER : URN pair. When Great Expectations encounters a $PARAMETER flag during Validation, it will replace the URN with a value retrieved from an Evaluation Parameter Store or Metrics Store (see also How to configure a MetricsStore).
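The substitution can be pictured with a small, library-free sketch. It is a conceptual illustration only, not how Great Expectations implements it: `resolve_kwargs` and the plain dictionary standing in for the Evaluation Parameter Store are hypothetical, and the row count of 10000 is an invented value.

```python
PARAMETER_KEY = "$PARAMETER"


def resolve_kwargs(kwargs, parameter_store):
    """Replace every {"$PARAMETER": urn} value with the value stored for that URN."""
    resolved = {}
    for name, value in kwargs.items():
        if isinstance(value, dict) and PARAMETER_KEY in value:
            urn = value[PARAMETER_KEY]
            if urn not in parameter_store:
                raise KeyError(f"No stored value for evaluation parameter: {urn}")
            resolved[name] = parameter_store[urn]
        else:
            resolved[name] = value
    return resolved


urn = (
    "urn:great_expectations:validations:upstream_expectation_suite:"
    "expect_table_row_count_to_be_between.result.observed_value"
)

# Pretend the upstream validation observed 10000 rows (illustrative number).
parameter_store = {urn: 10000}

downstream_kwargs = {"value": {PARAMETER_KEY: urn}}
resolved = resolve_kwargs(downstream_kwargs, parameter_store)
print(resolved)  # {'value': 10000}
```

The sketch also shows why ordering matters: if the upstream value has not been stored before the downstream Expectation is resolved, there is nothing to substitute.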

When executed in the notebook, this Expectation will generate a Validation Result. Most values will be missing, since interactive evaluation was disabled.

expected_validation_result = {
    "success": None,
    "expectation_config": {
        "kwargs": {
            "value": {
                "$PARAMETER": "urn:great_expectations:validations:upstream_expectation_suite:expect_table_row_count_to_be_between.result.observed_value"
            },
        },
        "expectation_type": "expect_table_row_count_to_equal",
        "meta": {},
    },
    "meta": {},
    "exception_info": {
        "raised_exception": False,
        "exception_traceback": None,
        "exception_message": None,
    },
    "result": {},
}

Your URN must be exactly correct in order to work in production. Unfortunately, successful execution at this stage does not guarantee that the URN is specified correctly and that the intended parameters will be available when executed later.
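Because a typo in the URN only surfaces later, a quick local sanity check can catch some mistakes early. The sketch below assumes the URN shape seen in this guide (`urn:great_expectations:validations:<suite name>:<expectation type>.result.<result key>`); other URN forms are not covered, and `check_urn` is a hypothetical helper, not part of Great Expectations.

```python
import re

# Shape inferred from the URN used in this guide (an assumption, not a full grammar).
VALIDATIONS_URN = re.compile(
    r"urn:great_expectations:validations:"
    r"(?P<suite>[^:]+):"
    r"(?P<expectation_type>expect_[a-z0-9_]+)"
    r"\.result\.(?P<result_key>\w+)"
)


def check_urn(urn, known_suites):
    """Return a list of problems found in the URN (empty if none were found)."""
    match = VALIDATIONS_URN.fullmatch(urn)
    if match is None:
        return ["URN does not match the expected validations URN shape"]
    problems = []
    if match["suite"] not in known_suites:
        problems.append(f"unknown Expectation Suite: {match['suite']}")
    return problems


known_suites = {"upstream_expectation_suite", "downstream_expectation_suite"}

good = check_urn(
    "urn:great_expectations:validations:upstream_expectation_suite:"
    "expect_table_row_count_to_be_between.result.observed_value",
    known_suites,
)
typo = check_urn(
    "urn:great_expectations:validations:upstream_expectation_suit:"
    "expect_table_row_count_to_be_between.result.observed_value",
    known_suites,
)
print(good)  # []
print(typo)  # ['unknown Expectation Suite: upstream_expectation_suit']
```

In a real project the set of known suite names could come from `context.list_expectation_suite_names()` rather than being hard-coded. A check like this cannot confirm that the referenced Expectation actually exists in the upstream suite, so it complements rather than replaces a full Checkpoint run.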

Save your Expectation Suite

downstream_validator.save_expectation_suite(discard_failed_expectations=False)

This step is necessary because your $PARAMETER will only function properly when invoked within a Validation operation with multiple Validators. The simplest way to execute such an operation is through a Validation Operator, and Validation Operators are configured to load Expectation Suites from Expectation Stores, not memory.

Execute a Checkpoint

This will execute both validations and pass the evaluation parameter from the upstream validation to the downstream.

checkpoint = context.add_or_update_checkpoint(
    name="checkpoint",
    validations=[
        {
            "batch_request": upstream_batch_request,
            "expectation_suite_name": upstream_validator.expectation_suite_name,
        },
        {
            "batch_request": downstream_batch_request,
            "expectation_suite_name": downstream_validator.expectation_suite_name,
        },
    ],
)

checkpoint_result = checkpoint.run()

Rebuild Data Docs and review results in docs

You can do this within your notebook by running:

context.build_data_docs()

Once your Data Docs rebuild, open them in a browser and navigate to the page for the new Validation Result.

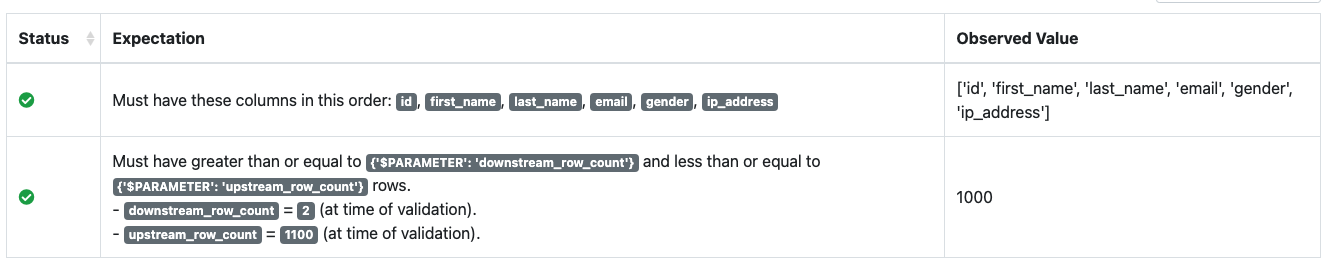

If your Evaluation Parameter was executed successfully, you'll see something like this:

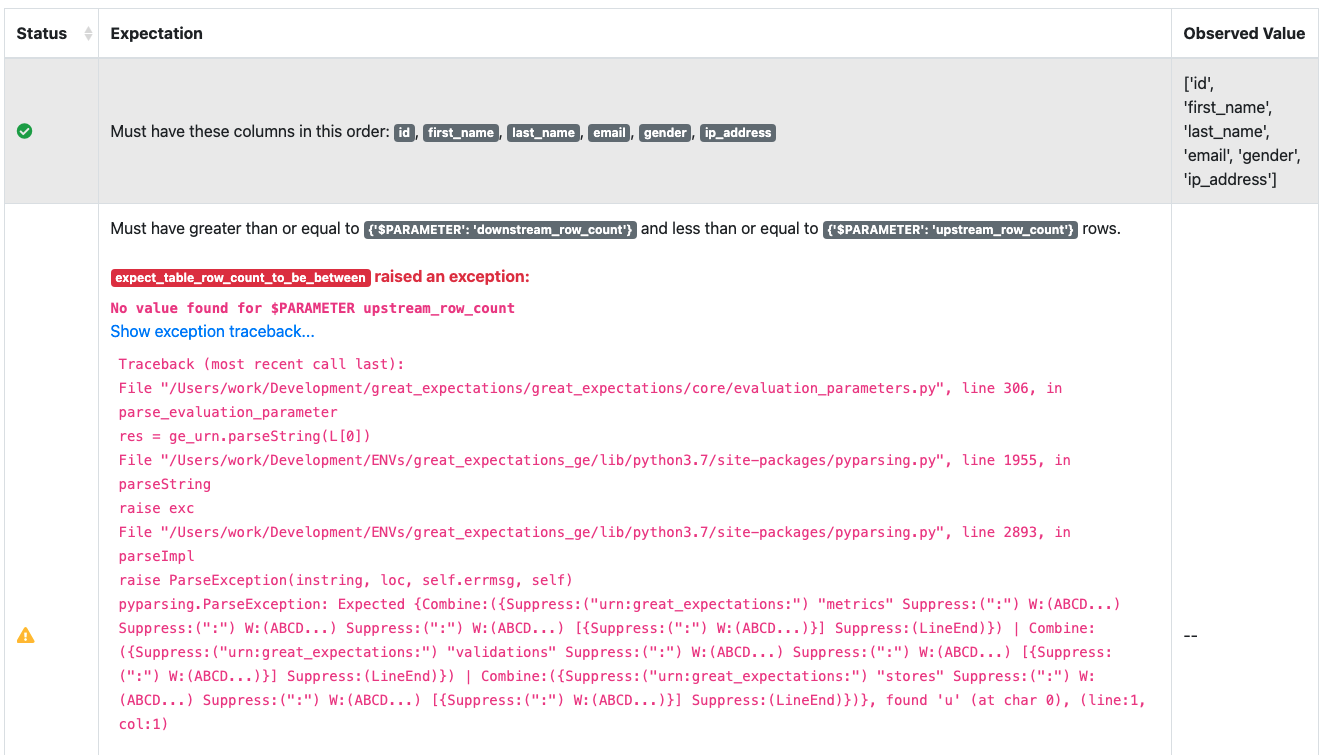

If it encountered an error, you'll see something like this. The most common problem is a mis-specified URN name.