Validate unstructured data with GX Cloud

Enterprise data often includes large amounts of unstructured data such as PDFs, images, emails, and sensor logs, but validating its quality is difficult. Data quality issues in unstructured data often go unnoticed and cause problems downstream. For example, if duplicate documents and failed OCR (Optical Character Recognition) results aren't flagged immediately, an AI model that consumes this data can produce poor outputs.

This tutorial provides a hands-on example of how to validate unstructured data using sample PDF data and GX Cloud. Running OCR on a PDF doesn't just extract text; it also produces metadata such as per-word confidence scores and bounding boxes, from which you can derive metrics like text length and header counts. GX Cloud lets you set up data quality checks on these metrics to increase confidence in your unstructured data, and your collaborators can view the results.

Prerequisite knowledge

This article assumes basic familiarity with GX components and workflows. If you're new to GX, start with the GX Cloud and GX Core overviews to familiarize yourself with key concepts and setup procedures.

Prerequisites

- Python version 3.10 to 3.13

- A GX Cloud account

- Your GX Cloud credentials (organization ID and access token) saved as the environment variables `GX_CLOUD_ORGANIZATION_ID` and `GX_CLOUD_ACCESS_TOKEN`
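Before running the tutorial, you can confirm that your credentials are visible to Python. This minimal sketch assumes the standard GX Cloud variable names `GX_CLOUD_ORGANIZATION_ID` and `GX_CLOUD_ACCESS_TOKEN`; `missing_gx_cloud_vars` is a hypothetical helper, so adjust the names if your setup differs.

```python
import os

# Assumed GX Cloud credential variable names; adjust if your setup differs
REQUIRED_VARS = ("GX_CLOUD_ORGANIZATION_ID", "GX_CLOUD_ACCESS_TOKEN")


def missing_gx_cloud_vars(env=None):
    """Return the required GX Cloud variables that are unset or empty."""
    env = os.environ if env is None else env
    return [name for name in REQUIRED_VARS if not env.get(name)]


if __name__ == "__main__":
    missing = missing_gx_cloud_vars()
    if missing:
        print(f"Missing environment variables: {', '.join(missing)}")
    else:
        print("GX Cloud credentials found.")
```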

Install dependencies

- Open a terminal window and navigate to the folder you want to use for this tutorial.

- Install Poppler and Tesseract. Poppler is a PDF rendering library that this tutorial uses to read the PDFs. Tesseract is an open source OCR engine that this tutorial uses to perform OCR on the PDFs. The commands below use Homebrew; on systems without Homebrew, install both with your platform's package manager.

```shell
brew install poppler
brew install tesseract
```

- Optional. Create a Python virtual environment and activate it.

```shell
python -m venv my_venv
source my_venv/bin/activate
```

- Install the Python libraries that you will use in this tutorial, including the Great Expectations library.

```shell
pip install pandas datasets pdf2image pytesseract requests great_expectations
```
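To confirm that the system packages are reachable before you run the OCR step, a quick check like the following can help. It is a sketch that assumes pdf2image finds Poppler through the `pdfinfo` and `pdftoppm` executables on your `PATH` (its default behavior) and that pytesseract calls the `tesseract` executable; `check_ocr_tools` is a hypothetical helper.

```python
import shutil

# pdfinfo and pdftoppm ship with Poppler and are called by pdf2image;
# tesseract is the OCR engine that pytesseract wraps
REQUIRED_TOOLS = ("pdfinfo", "pdftoppm", "tesseract")


def check_ocr_tools(which=shutil.which):
    """Map each required executable to whether it was found on PATH."""
    return {tool: which(tool) is not None for tool in REQUIRED_TOOLS}


if __name__ == "__main__":
    for tool, found in check_ocr_tools().items():
        print(f"{tool}: {'found' if found else 'NOT FOUND'}")
```

If any tool reports `NOT FOUND`, rerun the install step or add the tool's install location to your `PATH`.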

Import the required Python libraries

- Create the Python file for this project.

```shell
touch gx_unstructured_data.py
```

- Open the Python file in your code editor of choice.

- Import the libraries you will be using for data validation in this tutorial.

```python
import pandas as pd  # Data manipulation
import great_expectations as gx  # Data validation
import great_expectations.exceptions.exceptions as gxexceptions  # For exceptions
import great_expectations.expectations as gxe  # For Expectations
```

Load the dataset and convert it into a dataframe

This tutorial uses an open source dataset of PDFs from Hugging Face. You will convert the first page of the first 5 PDFs into an image, run OCR on that page, and finally extract the metrics from it.

- Load the dataset.

```python
from datasets import load_dataset  # Load PDF OCR dataset from Hugging Face

ds = load_dataset("broadfield-dev/pdf-ocr-dataset", split="train[:5]")
```

- Iterate through the samples. For each one, download the PDF, convert its first page into an image, run OCR on that image, and store the resulting metrics.

```python
import pytesseract  # OCR engine
import requests  # Download the PDFs
from pdf2image import convert_from_bytes  # Convert PDF pages to images
from pytesseract import Output  # Structured OCR output

records = []

for sample in ds:
    # Download the PDF from the URL in the 'urls' field
    pdf_url = None
    urls = sample.get("urls")
    if isinstance(urls, list) and urls:
        pdf_url = urls[0]
    elif isinstance(urls, str):
        pdf_url = urls

    if not pdf_url:
        print(f"No PDF URL found in sample: {list(sample.keys())}")
        continue

    response = requests.get(pdf_url, timeout=30)
    if response.status_code != 200:
        print(f"Failed to download PDF from {pdf_url}")
        continue

    pdf_bytes = response.content
    print(f"Processing PDF: {sample.get('ids', 'unknown')}")

    # Render only the first page of each PDF as an image
    pages = convert_from_bytes(pdf_bytes, dpi=200, first_page=1, last_page=1)

    all_ocr_text = []
    all_confidences = []
    all_heights = []

    for image in pages:
        # Run OCR on the page: word-level data plus the plain text
        ocr_data = pytesseract.image_to_data(image, output_type=Output.DICT)
        ocr_text = pytesseract.image_to_string(image)
        all_ocr_text.append(ocr_text)

        # Collect confidences of recognized words (Tesseract uses -1 for non-word rows)
        all_confidences.extend(
            float(c)
            for t, c in zip(ocr_data["text"], ocr_data["conf"], strict=False)
            if t.strip() and float(c) >= 0
        )
        # Collect the bounding-box height of each recognized word
        all_heights.extend(
            int(h)
            for t, h in zip(ocr_data["text"], ocr_data["height"], strict=False)
            if t.strip()
        )

    full_text = "\n".join(all_ocr_text)
    avg_conf = sum(all_confidences) / len(all_confidences) if all_confidences else 0
    # Heuristic: count words taller than 20 px as header text
    header_count = sum(1 for h in all_heights if h > 20)

    # Store metrics for validation
    records.append(
        {
            "file_name": sample.get("ids", "unknown"),
            "text_length": len(full_text),
            "ocr_confidence": round(avg_conf, 2),
            "num_detected_headers": header_count,
        }
    )
```

- Convert the metrics into a dataframe for validation.

```python
df = pd.DataFrame(records)
```

Connect to GX Cloud and define Expectations

In this tutorial, you will connect to your GX Cloud organization using the GX Cloud API. You will get a pandas Data Source and a dataframe Data Asset, or create them if they don't exist yet. A Batch Definition organizes a Data Asset's records into Batches and provides a method for retrieving those records. The Batch Definition in this tutorial uses the whole dataframe that you created in the previous step.

- Instantiate the GX Data Context and get or create the Data Source, Data Asset, and Batch Definition.

```python
# mode="cloud" connects using the GX Cloud credentials in your environment variables
context = gx.get_context(mode="cloud")

try:
    datasource = context.data_sources.get("PDF Scans")
except KeyError:
    datasource = context.data_sources.add_pandas("PDF Scans")

try:
    asset = datasource.get_asset("OCR Results")
except LookupError:
    asset = datasource.add_dataframe_asset("OCR Results")

try:
    batch_definition = asset.get_batch_definition("default")
except KeyError:
    batch_definition = asset.add_batch_definition_whole_dataframe("default")
```

- Get or create an Expectation Suite, and add Expectations that validate the metrics generated from the PDFs. This tutorial uses `ExpectColumnValuesToBeBetween` to check that each metric stored in the dataframe meets a minimum threshold. You can also try different Expectations or value ranges.

```python
try:
    suite = context.suites.get(name="OCR Metrics Suite")
except gxexceptions.DataContextError:
    suite = gx.ExpectationSuite("OCR Metrics Suite")
    suite = context.suites.add(suite)

suite.add_expectation(
    gxe.ExpectColumnValuesToBeBetween(column="text_length", min_value=500)
)  # at least 500 characters of OCR text
suite.add_expectation(
    gxe.ExpectColumnValuesToBeBetween(column="ocr_confidence", min_value=70)
)  # average OCR confidence of at least 70 (Tesseract scores range from 0 to 100)
suite.add_expectation(
    gxe.ExpectColumnValuesToBeBetween(column="num_detected_headers", min_value=2)
)  # at least 2 header-sized words
suite.save()
```
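Each Expectation in the suite sets only `min_value`, so a row passes when its value is greater than or equal to that minimum (GX treats the bounds as inclusive unless you set `strict_min=True`). If you want a quick local preview of which files would fail before sending results to GX Cloud, a plain-Python check can mirror the same thresholds. This is a hand-written sketch, not a GX API: `THRESHOLDS` and `failing_rows` are hypothetical names, the sample rows are illustrative, and GX Cloud remains the source of truth for Validation Results.

```python
# Hypothetical local mirror of the "OCR Metrics Suite" thresholds (inclusive minimums)
THRESHOLDS = {
    "text_length": 500,
    "ocr_confidence": 70,
    "num_detected_headers": 2,
}


def failing_rows(records, thresholds=THRESHOLDS):
    """Return {file_name: [columns below their minimum]} for rows that would fail."""
    failures = {}
    for row in records:
        failed = [col for col, minimum in thresholds.items() if row[col] < minimum]
        if failed:
            failures[row["file_name"]] = failed
    return failures


# Illustrative rows shaped like the tutorial's `records`
example_records = [
    {"file_name": "a.pdf", "text_length": 1200, "ocr_confidence": 91.3, "num_detected_headers": 4},
    {"file_name": "b.pdf", "text_length": 120, "ocr_confidence": 55.0, "num_detected_headers": 2},
]
print(failing_rows(example_records))
# {'b.pdf': ['text_length', 'ocr_confidence']}
```

In the tutorial script, `failing_rows(records)` would give the same preview for the real OCR metrics.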

Validate your Expectations

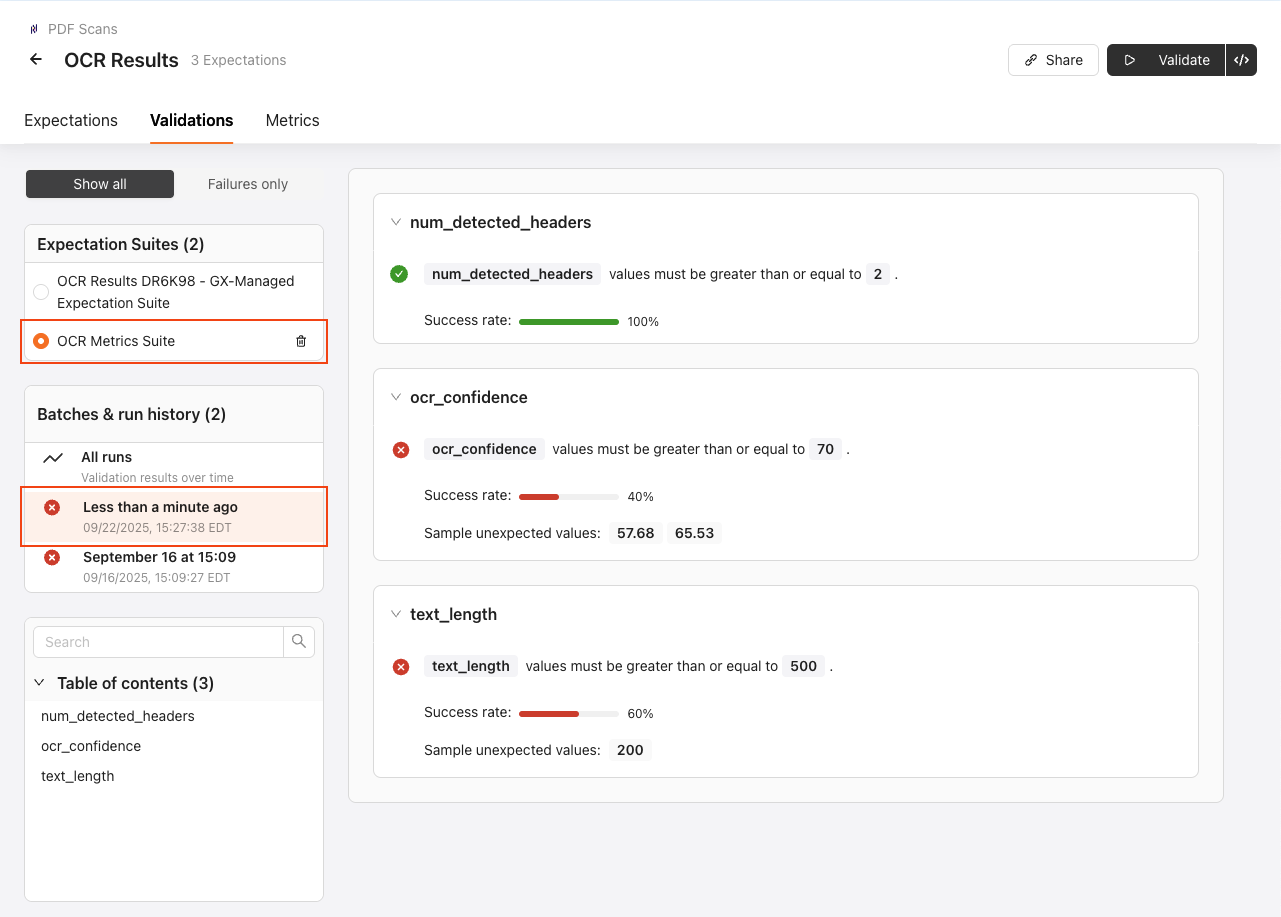

GX uses a Validation Definition to link a Batch Definition to an Expectation Suite, and a Checkpoint to run one or more Validation Definitions. After the Checkpoint runs, you can review the Validation Results in the GX Cloud UI.

- Create the Validation Definition.

```python
try:
    vd = context.validation_definitions.get("OCR Results VD")
except gxexceptions.DataContextError:
    vd = gx.ValidationDefinition(
        data=batch_definition, suite=suite, name="OCR Results VD"
    )
    vd = context.validation_definitions.add(vd)
```

- Create and run the Checkpoint. Because the Batch Definition covers the whole dataframe, you pass the dataframe in at run time through `batch_parameters`.

```python
try:
    checkpoint = context.checkpoints.get("OCR Checkpoint")
except gxexceptions.DataContextError:
    checkpoint = gx.Checkpoint(name="OCR Checkpoint", validation_definitions=[vd])
    checkpoint = context.checkpoints.add(checkpoint)

results = checkpoint.run(batch_parameters={"dataframe": df})
print(f"Validation passed: {results.success}")
```
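The Data Source, Data Asset, Batch Definition, Expectation Suite, Validation Definition, and Checkpoint code in this tutorial all follow the same get-or-create pattern: look the object up by name, and create it only if the lookup raises. If you prefer to keep that logic in one place, a small helper can express it. `get_or_create` is a hypothetical helper, not part of the GX API; you pass it the lookup, the creation call, and the exception type (or tuple of types) that signals a missing object.

```python
from __future__ import annotations

from typing import Callable, TypeVar

T = TypeVar("T")


def get_or_create(
    get: Callable[[], T],
    create: Callable[[], T],
    missing: type[Exception] | tuple[type[Exception], ...],
) -> T:
    """Return the existing object, or create it if the lookup raises `missing`."""
    try:
        return get()
    except missing:
        # Only the "not found" exception(s) trigger creation; others propagate
        return create()


# Hypothetical wiring with the tutorial's objects:
# datasource = get_or_create(
#     lambda: context.data_sources.get("PDF Scans"),
#     lambda: context.data_sources.add_pandas("PDF Scans"),
#     KeyError,
# )
```

Keeping the exception type as a parameter matters here, because the tutorial's lookups signal a missing object with different exceptions (`KeyError`, `LookupError`, or `DataContextError`).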

Review the results

Now that you have set up the Data Source, Data Asset, and Expectations and have run the Checkpoint, you can view the Validation Results in the GX Cloud UI.

- Log in to GX Cloud, navigate to the Data Assets page, and find the `OCR Results` Data Asset that you used earlier in the tutorial.

- Click the Data Asset and then the Validations tab. Under Expectation Suites, select `OCR Metrics Suite`, the suite that you created above, and then under Batches & run history, select the Validation you just ran.

The path forward

Using this tutorial as a framework, you can plug in your own unstructured data and add other Expectations from the Expectation Gallery to the Expectation Suite. You can also validate your unstructured data as part of a data pipeline by running this code with an orchestrator.
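When this code runs as an orchestrator task, you usually want the task to fail if validation fails, so that downstream steps don't consume bad OCR output. This sketch assumes that the object returned by `checkpoint.run()` exposes a boolean `success` attribute, as the Checkpoint result in GX Core does; `require_success` and `DataQualityError` are hypothetical names.

```python
class DataQualityError(RuntimeError):
    """Raised to fail a pipeline task when validation does not pass."""


def require_success(result, label="OCR Checkpoint"):
    """Raise if a Checkpoint-style result did not succeed; return it otherwise.

    Assumes `result` has a boolean `success` attribute. A missing attribute
    is treated as a failure.
    """
    if not getattr(result, "success", False):
        raise DataQualityError(f"{label} failed; see the Validation Results in GX Cloud.")
    return result


# Hypothetical wiring inside a pipeline task:
# require_success(checkpoint.run(batch_parameters={"dataframe": df}))
```

Raising an exception, rather than only logging, is what lets most orchestrators mark the task as failed and stop dependent tasks.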

Businesses that rely on unstructured data should take steps to ensure its quality, but this is only one of many data quality scenarios relevant to an organization. Explore our other data quality use cases for more insights and best practices on expanding your data validation to cover key data quality dimensions.